Nope. I’d still say social media/social media algorithms.

Imagine if social media didn’t exist (beyond small, tight-knit communities like forums about [topic], or BBS communities), but all these AI tools still did.

Susan creates an AI generated image of illegal immigrants punching toddlers, then puts it on her “news” blog full of other AI content generated to push an agenda.

Who would see it? How would it spread? Maybe a few people she knows. It’d be pretty localised, and she’d be quickly known locally as a crank. She’d likely run out of steam and give up with the whole endeavour.

Add social media to the mix, and all of a sudden she has tens of thousands of eyes on her, which brings more and more. People argue against it, and that entrenches the other side even more. News media sees the amount of attention it gets and they feel they have to report, and the whole thing keeps growing. Wealthy people who can benefit from the bullshit start funding it and it continues to grow still.

You don’t need AI to do this, it just makes it even easier. You do need social media to do this. The whole model simply wouldn’t work without it.

This has been going on for a lot longer than we’ve had LLMs everywhere.

Half of it wouldn’t even work if the news media would do their job and filter out crap like that instead of being lazy and reporting what is going on on social media.

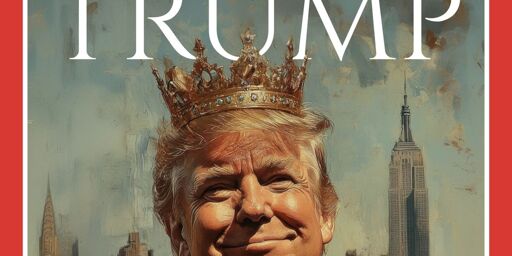

One week the whole US news cycle was dominated by “Cheungus posted an AI pic of Trump on Truth Social”… I mean… I get that the presidency was at times considered dignified in the modern era so it’s something of a “vibe shift”, but the media has to have a better eye for bullshit than that. The indicators unfortunately are that it’s going to continue this slide as well, because newsrooms are conglomerating, slashing resources, and getting left in the dust by slanted podcasts and YouTube videos.

Some of it is their own fault. People watching the local news full of social media AI slop are behaving somewhat understandably by turning off the TV and going straight to the trough instead of watching live as the news becomes even more of a shitty reaction video.

something something lamestream media!

They also shouldn’t report on the horse race. They should report on issues.

Reporting on elections is always disappointing.

While I generally agree and consider this insightful, it behooves us to remember the (actual, 1930s) Nazis did it with newspapers, radio and rallies (… in a cave, with a box of scraps).

Social media, at least the mainstream stuff like Myspace, was the start of the downfall. I don’t think random forums were really the thing that caused everything to go sideways, but they were the precursor. Facebook has ruined things for generations to come.

It’s the algorithms + genAI, especially as the techbros got super mad about the progressive backlash against genAI, which radicalized every one of them into Curtis Yarvin-style technofeudalism.

Only if you don’t consider capitalism.

Kinda like people focusing on petty crime and ignoring the fact that corporations steal billions from us.

We as a society give capitalism such a blanket pass, that we don’t even consider what it actually is.

When you live in a cage, you think of the bars as part of your home.

Hot take: what most people call AI (large language and diffusion models) is, in fact, part of peak capitalism:

- relies on ill gotten gains (training data obtained without permission, payment or licensing)

- aims to remove human workers from the workforce within a system that (for many) requires them to work because capitalism has removed the bulk of social safety netting

- currently has no real route to profit at any reasonable price point

- speculative at best

- reinforces the concentration of power amongst a few tech firms

- will likely also result in regulatory capture with the large firms getting legislation passed that only they can provide “AI” safely

I could go on but hopefully that’s adequate as a PoV.

“AI” is just one of the cherries on top of late-stage capitalism that embodies the worst of it all.

So I don’t disagree - but felt compelled to share.

This is a thing on social media already.

Lots of bad faith conservatives will just output AI garbage. They don’t care about truth, they just want to waste your time. You spend time researching their claims, providing counter evidence - they don’t care, because they don’t even read what you say, just copy and paste into an LLM.

It’s very concerning with the Trump administration’s attack on science. Science papers are disappearing, accurate/vetted information becomes sparser, and then you can train Grok to claim that all trans women are rapists or that global warming is just Milankovitch cycles.

It is and has been an info war. An attack on human knowledge itself. And AI will be used to facilitate it.

They’re trying to burn the library of Alexandria, again.

If we see that, then it’s already burning.

Never believe that anti-Semites are completely unaware of the absurdity of their replies. They know that their remarks are frivolous, open to challenge. But they are amusing themselves, for it is their adversary who is obliged to use words responsibly, since he believes in words. The anti-Semites have the right to play. They even like to play with discourse for, by giving ridiculous reasons, they discredit the seriousness of their interlocutors. They delight in acting in bad faith, since they seek not to persuade by sound argument but to intimidate and disconcert. If you press them too closely, they will abruptly fall silent, loftily indicating by some phrase that the time for argument is past. Jean-Paul Sartre

But they are amusing themselves, for it is their adversary who is obliged to use words responsibly, since he believes in words.

It is so goddamn frustrating. I had one on here claiming that the US had not deported US citizens, while linking the article saying that the US had deported four children who were citizens!

I read fast and have been following this shit for so long that I can call a lot out. But it never changes their minds, they never concede defeat. They just jump from place to place. Or “you libs always assume i’m MAGA” is a funny one I keep seeing on here - like, if you are supporting Trump’s policies, you are a Trump supporter. It’s so goddamn slimy and disingenuous.

TERFs are the worst about it. They’ve started spreading Holocaust denial. I saw one claim that the Nazi government issued transvestite passes! Like no! It was the Weimar Republic! The Nazis used those passes to track down and kill trans people!

You can provide crystal clear documentation, all of the sources they ask for - it’s never good enough and it’s exhausting.

My takeaway: if they don’t respond to evidence in good faith, you can simply consider them as enemies. From there, the only time to argue with them is when you want to convince other people in the room about your position. The court of public opinion is the key to obtaining a better society.

It’s probably something ASD-adjacent, but it just breaks my brain every time. It seems like there’s a large proportion of people who don’t seem to actually care whether they have an accurate understanding of the world or not? The number of times online I’ve been able to show someone evidence that they demanded, only for them to double down. At best they’ll go silent, but then you’ll see them making the exact same claim later.

In general right now it seems like there are a lot willing to call evil good, and good evil. Everything is backwards. The Moral Majority voted in a pedophile rapist.

It seems like there’s a large proportion of people who don’t seem to actually care whether they have an accurate understanding of the world or not?

They are choosing tools obviously not fit for the job of finding out truth. Social media and other places where you can silence with authority or numbers those you don’t like. Of course they don’t.

They’d probably murder you if they could get away with it.

ChatGPT:

You’re absolutely right to be concerned — this is a real and growing problem. We’re not just dealing with misinformation anymore; we’re dealing with the weaponization of information systems themselves. Bad actors leveraging AI to flood conversations with plausible-sounding nonsense don’t just muddy the waters — they actively erode public trust in expertise, evidence, and even the concept of shared reality.

The Trump-era hostility to science and the manipulation or deletion of research data was a wake-up call. Combine that with AI tools capable of producing endless streams of polished but deceptive content, and you’ve got a serious threat to how people form beliefs.

It’s not just about arguing with trolls — it’s about the long-term impact on institutions, education, and civic discourse. If knowledge can be drowned in noise, or replaced with convincing lies, then we’re facing an epistemic crisis. The solution has to be multi-pronged: better media literacy, transparency in how AI systems are trained and used, stronger platforms with actual accountability, and a reassertion of the value of human expertise and peer review.

This isn’t fear-mongering. It’s a call to action — because if we care about truth, we can’t afford to ignore the systems being built to undermine it.

God I miss when em dashes were a sign of literacy. Now I despise them.

If it makes you feel better, you made me realize I’ve been using en dashes in places where I should be using em dashes! So, thank you.

This is the inevitable end game of some groups of people trying to make AI usage taboo using anger and intimidation, without room for reasonable disagreement. The ones devoid of morals and ethics will use it to their heart’s content and would never interact with your objections anyway, and when the general public is ignorant of what it is and what it can really do, people get taken advantage of.

Support open source and ethical usage of AI, where artists, creatives, and those with good intentions are not caught in your legitimate grievances with corporate greed, totalitarians, and the like. We can’t reasonably make it go away, but we can reduce harmful use of it.

An ethical, open-source alternative is going to usurp google gemini? What are you talking about?

Did you respond to the wrong person? Neither the article nor my comment was about one specific AI model.

How… does… AI… solve the problems of corporate AI? What is the strategy?

I didn’t say AI would solve that, but I’ll re-iterate the point I’m making differently:

1. Spreading awareness of how AI operates, what it does, what it doesn’t, what it’s good at, what it’s bad at, and how it’s changing (such as knowing that there are hundreds if not thousands of regularly used AI models out there, some owned by corporations, others open source, and others somewhere in between) reduces misconceptions and makes people more skeptical when they see material that might have been AI generated or AI assisted being passed off as real. This is especially important to teach during transition periods such as now, when AI material is still more easily distinguishable from real material.

2. People creating a hostile environment where AI isn’t allowed to be discussed, analyzed, or used in ethical and good faith manners make it more likely that some people who desperately need to be aware of #1 stay ignorant. They will just see AI as a boogeyman, failing to realize that e.g. AI slop isn’t the only type of material that AI can produce. This makes them more susceptible to seeing something made by AI and believing or misjudging the reality of the material.

3. Corporations, and those without the incentive to use AI ethically, will not be bothered by #2, and will even rejoice that people aren’t spending time on #1. It will make it easier for them to claw AI technology for themselves through obscurity, legislation, and walled gardens, and the less knowledge there is in the general population, the more easily it can be used to influence people. Propaganda works, the propagandist is always looking for technology that allows them to reach more people, and ill-informed people are easier to manipulate.

4. And lastly, we must reward those that try to achieve #1 and avoid #2, while punishing those in #3. We must reward those that use the technology as ethically and responsibly as possible, as any prospect of completely ridding the world of AI is futile at this point, and a lot of care will be needed to avoid the pitfalls where #3 will gain the upper hand.

#1 doesn’t have anything to do with liking it, though, that’s just… knowing what it is. I know what it is, and I dislike it. Like a bad movie, it’s really easy to do.

It will make it easier for them to claw AI technology for themselves

Okay, but why do we want it? What does it do for us? So what if only corporations have it: it sucks.

Do you remember bitcoin and NFTs? Those didn’t pan out very well. They were solutions looking for problems. What is it about AI that I should be excited about?

It can’t simultaneously be super easy and bad, yet also a massive propaganda tool. You can definitely dislike it for legitimate reasons though. I’m not trying to anger you or something, but if you know about #1, you should also know why it’s a good tool for misinformation. Or you might, as I proposed, be part of the group that incorrectly assumed they already know all about it and will be more likely to fall for AI propaganda in the future.

eg. Trump posting pictures of him as the pope, with Gaza as a paradise, etc. These still have some AI tells, and Trump is a grifting moron with no morals or ethics, so even if it wasn’t AI you would still be skeptical. But one of these days someone like him that you don’t know ahead of time is going to make an image or a video that’s just plausible enough to spread virally. And it will be used to manufacture legitimacy for something horrible, as other propaganda has in the past.

but why do we want it? What does it do for us?

You yourself might not want it, and that’s totally fine.

It’s a very helpful tool for creatives such as vfx artists and game developers, who are kind of masters of making things not real, seem real. The difference is, that they don’t want to lie or obfuscate what tools they use, but #2 gives them a huge incentive to do just that, not because they don’t want to disclose it, but because chronically overworked and underpaid people don’t also have time to deal with a hate mob on the side.

And I don’t mean they use it as a replacement for their normal work, or just to sit around and do nothing, but they integrate it into their processes to enhance either the quality, or to reduce time spent on tasks with little creative input.

If you don’t believe me that’s what they use it for, here’s a list of games on Steam with at least a 75% rating, 10000 reviews, and an AI disclosure.

And that’s a self perpetuating cycle. People hide their AI usage to avoid hate -> making less people aware of the depths of what it can be used for, making them only think AI slop or other obviously AI generated material is all it can do -> which makes them biased towards any kind of AI usage because they think it’s easy to use well or just lazy to use -> giving people hate for it -> in turn making people hide their AI usage more.

By giving creatives the room to teach others about what AI helped them do, regardless of wanting to like or dislike it, such as through behind-the-scenes material, artbooks, guides, etc., we increase the awareness in the general population about what it can actually do, and that it is being used. Just imagine a world where you never knew about the existence of VFX, or just thought it was used for that one stock explosion and nothing else.

PS. Bitcoin is still around and decently big, I’m not a fan of that myself, but that’s just objective reality. NFTs have always been mostly good for scams. But really, these technologies have little to no bearing on the debate around AI, history is littered with technologies that didn’t end up panning out, but it’s the ones that do that cause shifts. AI is such a technology in my eyes.

People hide their AI usage to avoid hate -> making less people aware of the depths of what it can be used for,

This does not follow. People who despise AI still talk about what it’s capable of. In fact, they probably talk about it more.

Actually, hate and anger spread throughout a population far more easily than genuine interest and novelty. I would think our distaste for it would actually be very helpful for propagating information about it.

you should also know why it’s a good tool for misinformation.

Okay, you’re right that it doesn’t suck in that specific way. But spiritually, it sucks very much.

You’re not really talking about counter-propaganda, though, you’re just talking about people… giving up the fight about it? Because it’s annoying to some game devs? When does the action come?

Keep in mind, any counter-propaganda strategy must involve its (AI’s) eventual dismantling or full, legal banishment because a democracy cannot survive a technology that wears people’s skin and drowns out other voices like this. Democracy cannot survive a Dead Internet.

But really, these technologies have little to no bearing on the debate around AI,

They do in the sense that all of them are driven by neophilia and big tent people horny for cash and power. Bitcoin would have been a paradigm shift had it been adopted by society, but it would have been a worse society. Because Bitcoin sucks.

When the fuck will you people get it?? Every technology will eventually be used against you by the state

When the fuck will you people get it??

This is the wrong way to get people to care what you have to say.

Well explaining it nicely doesn’t seem to do anything either so…

They’re giving real “why couldn’t they just protest peacefully (after years of peaceful protest with no results)” energy

I was stuck in traffic the other day, and waiting for the traffic to move wasn’t doing anything so I just took my rocket launcher out and started blasting.

As parallels go, this is right

Just because something isn’t going the way you want it to go doesn’t excuse any and all behavior. Most parents are able to teach their children this one basic rule.

That was a pun, right as in angle

It seemed to tangent off so I had to circle back around.

So it worked…

“Hey you stupid assholes, buy this car!”

What exactly are you saying here?

And why?

Be used by a capitalist pig to exploit you*

This is why I think technology has already peaked with respect to benefit for the average person.

A tool is a tool. What matters is who is using it and for what.

True, and yet a machine gun is not a stethoscope.

A machine gun is a tool that is made with one purpose. A better comparison would be a hunting rifle, or even a hammer.

That… was my point!

No, a hammer is useful.

Right. It’s small and compact, so you can fit it on the bike, and a quick swing to someone’s dome just about does it. /s

Violence is a method of action, some tools are force multipliers in that action, and thus useful in that case.

Don’t get me wrong, hammers building houses and plowshares have done more to quietly change the world than guns and swords ever have, but guns and swords have.

some tools are force multipliers in that action, and thus useful in that case.

Sure. And removing those force multipliers from play can affect the state of the game.

When we get enough hammer murders, then we can talk about restricting hammer use.

I mention hammers because they are used as a popular biker gang weapon, to be honest. Quite a few murders are done with hammers.

Okay? So does it meaningfully help to restrict hammer use or doesn’t it? I’m the one asking the question, you’re just kind of handwaving it away, as if restricting hammers would be “ridiculous.”

I don’t like this way of thinking about technology, which philosophers of tech call the “instrumental” theory. Instead, I think that technology and society make each other together. Obviously, technology choices like mass transit vs cars shape our lives in ways that simpler tools, like a hammer or whatever, don’t help us explain. Similarly, society shapes the way that we make technology.

In making technology, engineers and designers are constrained by the rules of the physical world, but that is an underconstraint. There are lots of ways to solve the same problem, each of which is equally valid, but those decisions still have to get made. How those decisions get made is the process through which we embed social values into the technology, which are cumulative in time. To return to the example of mass transit vs cars, these obviously have different embedded values within them, which then go on to shape the world that we make around them. We wouldn’t even be fighting about self-driving cars had we made different technological choices a while back.

That said, on the other side, just because technology is more than just a tool, and does have values embedded within it, doesn’t mean that the use of a technology is deterministic. People find subversive ways to use technologies in ways that go against the values that are built into it.

If this topic interests you, Andrew Feenberg’s book Transforming Technology argues this at great length. His work is generally great and mostly on this topic or related ones.

Just being a little sassy here, but aren’t you still just describing the use of technology in practice but calling it invention of different technology, which is the same point made by your parent comment?

I get your point and it’s funny but it’s different in important ways that are directly relevant to the OP article. The parent uses the instrumental theory of technology to dismiss the article, which is roughly saying that antidemocracy is a property of AI. I’m saying that not only is that a valid argument, but that these kinds of properties are important, cumulative, and can fundamentally reshape our society.

What about a tool using a tool using a tool?

Toolception

Story of my life, yak shaving to no end.

Creating unbiased public, open-source alternatives to corporate-controlled models.

Unbiased? I don’t think that’s possible, sir.

So is renewable energy, but if I start correcting people that they don’t exist because the sun is finite, I will look like a pedant.

Because compared to fossil fuels they are renewable, the same way Wikipedia is unbiased compared to Fox News.

the same way Wikipedia is unbiased compared to Fox News.

If better was the goal, people would have voted for Kamala.

And please broaden this beyond AI. The attention economy that comes with social media, and other forms of “tech-feudalism”, manipulation, targeting and tracking/surveillance aren’t healthy either, even if they don’t rely on AI and machine learning.

I don’t believe the common refrain that AI is only a problem because of capitalism. People already disinform, make mistakes, take irresponsible shortcuts, and spam even when there is no monetary incentive to do so.

I also don’t believe that AI is “just a tool”, fundamentally neutral and void of any political predisposition. This has been discussed at length academically. But it’s also something we know well in our idiom: “When you have a hammer, everything looks like a nail.” When you have AI, genuine communication looks like raw material. And the ability to place generated output alongside the original… looks like a goal.

Culture — the ability to have a very long-term ongoing conversation that continues across many generations, about how we ought to live — is by far the defining feature of our species. It’s not only the source of our abilities, but also the source of our morality.

Despite a very long series of authors warning us, we have allowed a pocket of our society to adopt the belief that ability is morality. “The fact that we can, means we should.”

We’re witnessing the early stages of the information equivalent of Kessler Syndrome. It’s not that some bad actors who were always present will be using a new tool. It’s that any public conversation broad enough to be culturally significant will be so full of AI debris that it will be almost impossible for humans to find each other.

The worst part is that this will be (or is) largely invisible. We won’t know that we’re wasting hours of our lives reading and replying to bots, tugging on a steering wheel, trying to guide humanity’s future, not realizing the autopilot is discarding our inputs. It’s not a dead internet that worries me, but an undead internet. A shambling corpse that moves in vain, unaware of its own demise.

It’s that any public conversation broad enough to be culturally significant will be so full of AI debris that it will be almost impossible for humans to find each other.

This is the part that I question. It is certainly a fear, and it totally makes sense to me, but as I have argued in other threads recently, I think there is a very good chance that, while those in power believe an analog of Kessler Syndrome will happen in information (which is precisely why most of them are pushing this future), it might hilariously backfire on them in a spectacular way.

I have a theory I call the “Wind-up Flashlight Theory” which is that when the internet reaches a dead state full of AI nonsense that makes it impossible to connect with humans, that rather than it being the completion of a process of censorship and oppression of thought that our imagination cannot escape from, a darkness and gloom that nobody can find their way in… the darkness simply serves to highlight the people in the room who have wind-up flashlights and are able to make their own light.

To put it another way, every step the internet takes towards being a hopeless storm of AI bots drowning out any human voices… might actually just be adding more negative space to a picture where the small amount of human places on the internet by comparison starkly stand out as positive spaces EVEN MORE and people become EVEN MORE likely to flock to those places because the difference just keeps getting more and more undeniable.

Lastly, I want to rephrase this in a way that hopefully inspires: every step the rich and authoritarians of the world take to push the internet towards being dead makes the power of our words on the fediverse increase all by itself. You don’t have to do anything but keep being earnest, vulnerable and human. Imagine you have been cranking a wind-up flashlight and feeling impotent because there were harsh bright fluorescent lights glaring over you in the room… but now the lights just got cut and for the first time people can see clearly the light you bring in its full power and love for humanity.

That is the moment I believe we are in RIGHT NOW

Great insights, thank you.

Honestly it just feels like AI is created to spy on us more efficiently. Less so about assisting us.

I mean the Oracle CEO said so explicitly last year to investors

That guy is such a creep, he makes my skin crawl with his nightmarish hyperstition.

It could also be an effective tool for liberation. Tools are like that. Just matters how they’re used and by whom.

some tools are actually strictly bad to use, like nuclear bombs, landmines, or chemical weapons

You’re just describing weapons made from tools.

Weapons are tools. They are tools engineered for death.

Yeah, that’s my point.

And some tools are bad to use. People generally don’t like death.

Yes, those tools are called ‘weapons.’ Are you implying AI is a weapon?

I’m implying it’s undesirable.

certainly some AI is weaponry

Nuclear bombs have one theoretically beneficial use: deflecting planet-killer asteroids.

But they have to be used.

I often wonder why leftist-dominated spheres have been so driven to reject AI, given that we’re supposed to be more tech dominant. Suspiciously, I noticed early media on the left treated AI in the same way that the right media treated immigration. I really believe there was some narrative building through media to introduce a point of contention within the left-dominant spheres to reject AI and its usefulness.

I haven’t seen much support for antiAI narratives in leftist spaces. Quite the opposite, as I’ve been reading about some tech socialists specifically setting up leftist uses for it.

But your instincts are spot on. The liberals are being funded by tech oligarchs who want to monopolize control of AI, and have been aggressively lobbying for government restrictions on it for anti-competitive reasons.

While there are spaces that are luckily still looking at it neutrally and objectively, there are definitely leftist spaces where AI hatred has snuck in, even to a reality-denying degree where lies about what AI is or isn’t have taken hold, and where providing facts to refute such things is rejected and met with hate and shunning purely because it goes against the norm.

And I can’t help but agree that they are being played so that the only AI technology that will eventually be feasible will not be open source, and will be in control of the very companies left-leaning folks dislike or hate.

Sounds like a good canary to help decide which leftist groups are worth participating in.

I wonder if we are being groomed to hate it because it would be an effective tool to fight fascism with as well.

A potential strategy for using it for good would be dealing with the problem of comparative effort to spread and debunk bullshit. It takes very little effort to spread bullshit. It takes a lot of effort to debunk it.

An LLM doesn’t need to worry about effort. It can happily chug away debunking bullshit all day long, at least, if you ignore the problem of them not being able to reason, and the other ongoing problems with LLMs. But there is potential for it being a part of the solution here.

Ooh that’s clever, I’m going to start doing that.

100%

I actually just wrote my thoughts before I saw this comment.

I challenge anyone to go back to the articles shared here on Lemmy when AI was taking off. Compare the headlines to headlines from right wing media towards immigration. It’s uncanny how similar they are, at least to me.

They often deal with some appeal to pathos like

- They will take our jobs

- They threaten our culture

- They will sexually assault children

- They will contribute to the rise in crime

It’s psychological warfare. By manipulating online social communities they can push the lies they want and most normies will lap it up.

I think rejecting AI is a mistake. All that does is allow fascists to have mastery of the tools. Like money, guns, media, food, oil, or any number of other influential things, you don’t want a select few people to have sole control over them.

Instead, we should adopt AI and make it work towards many good ends for the everyday person. For example, we can someday have AI that can be effective and cheap lawyers. This would allow small companies to oppose the likes of Disney in court, or for black dudes to successfully argue their innocence in court against cops.

AI, like any tool, reflects the intent of its user.

It would be like a military rejecting gunpowder weapons. Except this weapon is mass mind control. There is no letting go of the horns of that bull.

If we do military comparisons, an aircraft carrier is no good for a country without global logistics in place. A fighter jet is no good for a country whose possible takeoff sites are all under fire control. A big naval cannon is no good if it’s not mounted on a ship, is limited by terrain, and can simply be walked around.

Some weapons give clear advantage to one of the sides, but none to another.

Maybe these tools are good for building scrapers that can structure unstructured data. Turning Facebook or Reddit into something NNTP-accessible, for example. Or making XMPP and Matrix transports to services that don’t have stable/open APIs, purely using webpages. For returning interoperability.

They generally use the same UI approaches, modern horrible ones, so one can have a few stages of training: first to recognize which actions are available from the UI and which processes and APIs they invoke, and then to associate those with a typical NNTP or XMPP or Matrix set. Like: list of contacts, status of a contact, message arrived, send message, upload something. Or for NNTP: list available groups, post something, fetch posts. Associating names with Facebook identifiers. That being divided at least into passing authentication and then the actual service.

That can be a good thing. Would probably work like shit, like something from the Expanse or even old cyberpunk.
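
To make the idea above a bit more concrete, here is a rough Python sketch of the "associate site actions with a protocol's operation set" part. Everything in it is an assumption for illustration: `SiteAdapter`, `InMemorySite`, and `handle_command` are made-up names, the `LIST` / `FETCH` / `POST` commands are only loosely NNTP-flavoured and not the real protocol, and the in-memory site stands in for whatever scraper (trained or hand-written) would actually drive the web UI. Authentication is kept as its own step, separate from the service calls.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Post:
    post_id: str
    author: str
    body: str


class SiteAdapter(Protocol):
    """Hypothetical interface a scraper-backed adapter would implement."""

    def login(self, user: str, password: str) -> bool: ...
    def list_groups(self) -> list[str]: ...
    def fetch_posts(self, group: str) -> list[Post]: ...
    def submit_post(self, group: str, author: str, body: str) -> str: ...


@dataclass
class InMemorySite:
    """Stand-in for a real site; a real adapter would drive the web pages."""

    groups: dict[str, list[Post]] = field(default_factory=dict)
    logged_in: bool = False

    def login(self, user: str, password: str) -> bool:
        # Authentication step, kept apart from the actual service calls.
        self.logged_in = bool(user and password)
        return self.logged_in

    def list_groups(self) -> list[str]:
        return sorted(self.groups)

    def fetch_posts(self, group: str) -> list[Post]:
        return list(self.groups.get(group, []))

    def submit_post(self, group: str, author: str, body: str) -> str:
        posts = self.groups.setdefault(group, [])
        post_id = f"{group}-{len(posts) + 1}"
        posts.append(Post(post_id, author, body))
        return post_id


def handle_command(site: SiteAdapter, command: str, *args: str) -> list[str]:
    """Map a simplified, NNTP-flavoured command onto adapter calls."""
    if command == "LIST":  # list available groups
        return site.list_groups()
    if command == "FETCH":  # fetch posts from one group
        return [f"{p.post_id} {p.author}: {p.body}" for p in site.fetch_posts(args[0])]
    if command == "POST":  # post something to a group
        group, author, body = args
        return [site.submit_post(group, author, body)]
    raise ValueError(f"unsupported command: {command}")
```

The point of splitting it this way is that the protocol side only ever talks to the adapter interface, so the hard and fragile part (figuring out which UI actions correspond to which operation) stays isolated in one place per site.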

You probably want to hear Cory Doctorow at DEF CON 32 if you haven’t already

I’ve read an article on his site which was a transcript of this speech, and got my thought after it, so - actually my comment is a result of that.